(Portuguese translation, courtesy of Artur Weber)

This post is a distillation of some current thoughts on game preservation (extending to software preservation) that arose from a presentation I gave at Stanford two weeks ago. Video of that talk is here. The discussion in this post is a little more advanced and focuses mainly on the last 10-15 minutes of the talk. I have also posted a link to another presentation I gave at the Netherlands Institute for Sound and Vision in February. This earlier one is exclusively about the issues with standard game preservation. If you are unfamiliar with this whole topic, definitely check it out.

TLDR; The current preservation practices we use for games and software need to be significantly reconsidered when taking into account the current conditions of modern computer games. Below I elaborate on the standard model of game preservation, and what I’m referring to as “network-contingent” experiences. These network-contingent games are now the predominant form of the medium and add significant complexity to the task of preserving the “playable” historical record. Unless there is a general awareness of this problem with the future of history, we might lose a lot more than anyone is expecting. Furthermore, we are already in the midst of this issue, and I think we need to stop pushing off a larger discussion of it.

The standard model of game preservation

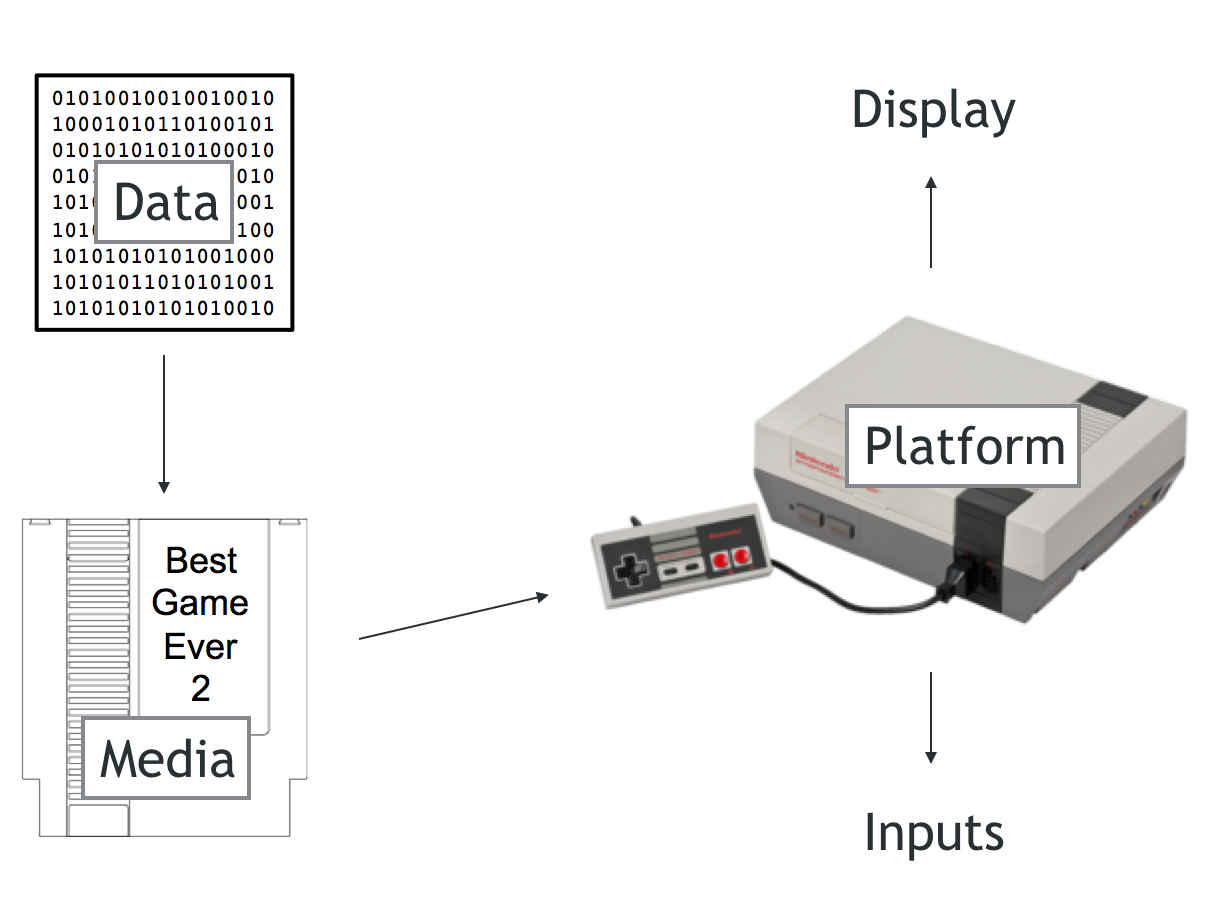

Any preservation activity must first decide on its scope, on the boundaries of what it considers to be worthy of saving, and on the basic definitions for the objects involved. In game preservation, the standard model for most (not all, as mentioned below) of the work I’ve been involved in resembles the image above.

There are essentially three major areas of interest: the physical extent of the game, the data stored on it, and the hardware necessary to run it. Briefly, the focus of a good deal of preservation effort is on the issues associated with accurately extracting data from physical media and maintaining both the physical media and hardware. For the former, there is an assumption that the data extracted from physical media can be run through the use of an emulator. This is a piece of software designed to take in old game data and make it playable on a modern machine. We are in pretty good shape with the emulation of the “major” computer systems and consoles released pre-2005 or so. (I have “major” in quotes because most emulation effort is directed towards the more popular platforms, with commercial success being the predominant criterion for selection as opposed to technical innovation, gameplay advances, or any other suitable historical metric.) The MAME project, with its new open source licensing and the inclusion of MESS, provides support for hundreds of systems, some swimmingly so, and others just barely. I’m aware that many other emulation projects exist, that they are highly fragile since many of them are passion projects, and that they suffer a lack of proper institutional backing. But at least there is a chance that if you have the proper game data, you can put it in an emulator and get back some playable experience.

The long-term physical maintenance prospects, on the other hand, are not particularly good. Physical media and hardware degrade over time, and without upkeep will simply cease functioning. Data stored on magnetic disks, optical discs, etc. will stop being readable, and so again, there is considerable effort in getting the data off of them. Some of the more popular systems may always be physically playable due to the sheer number of units available internationally, and the in-depth community knowledge of their technical specifications. There are numerous new consoles that are designed to play Nintendo Entertainment System games, for example, and maybe future production processes will allow for atomically perfect copies of older hardware. Regardless, both of these scenarios, either wholesale emulation or continuous physical maintenance, will not hold up going forward.

This is because the standard model of preservation, and the solutions devised for it, do not reflect the current state of game technology or our networked world. The historical object has now changed to a point where it does not reflect the methodologies developed for legacy games and game hardware. When I think of a video game, as an object, I think of the NES in the picture above. It is a singular experience, designed for a single piece of hardware and without any awareness of or dependence on the outside world. The problem is that for people not born in the 1980s, say people born around 2000, the video game, as an object, is a networked application on a mobile phone. Now, I’m not saying that if you were / weren’t born in either of those times then you must have these preconceptions. I’m sure some people born in 2000 totally love the NES. My point is that the preservation effort that I see in games is for the preservation of the past as seen from the standpoint of those active in game preservation. Those people want to make sure that their past isn’t lost, that you can still find out about and play M.U.L.E. or Escape from Mt. Drash for the foreseeable future.

This fear of loss is totally understandable; there are other mediums whose early histories are replete with stories of historical devastation. I routinely hear, and think about, the fate of silent film, where a significant percentage of its early productions are permanently lost. Much of the loss is due to a lack of public acceptance of early film as something worth preserving. The modern idea that film is a medium in need of a preservation record took nearly 50 years to fully develop, in which time much was lost. Early games may have been in that same state, but I’m beginning to think that that’s no longer the case. There are extensive historical listings of games available, certain early consoles are nearly totally preserved (in the sense that there is available data from most of their games), and there is constant, sustained community effort to reinforce those records. I’ve come to believe that games (and software) are going to deal with an inverse of the issues confronting early film. Namely,

We are producing objects that are getting more technologically complex, more interdependent, and less accessible. And we are producing them at a rate that dwarfs their previous historical outputs, and that will terminally outpace future preservation efforts.

Future Issues

(As a quick aside, this thinking is based, in part, on the recent survey work of David S. Rosenthal in Emulation and Virtualization as Preservation Strategies and on some ideas from James Newman’s book Best Before. If you would like more insight on the issues with networked emulation see the former. For gameplay preservation and historical considerations of play, check out Newman.)

I don’t think I need to support the claim that games are getting more technologically complex. Major studios are much, much larger than they used to be, and teams for AAA titles are incredibly large. Over 1000 people made GTA V. Around 10 people were responsible for DOOM. I know that modern, independent games have moved development back to smaller teams, but their work is also now dependent on toolsets and technologies that are significantly more complex and sprawling than those of even a decade ago.

That games are more interdependent is also not a controversial position. Most games are now distributed over a network, do not have physical dissemination of any kind, and require some form of network connection for play or updating. This is one of the major problems with future preservation activity. When a system is dependent on multiple parts that are asymmetrically disseminated, reconstructing the object and its played experience becomes much more difficult. By “asymmetrical dissemination” I mean that the player of a mobile game, like Candy Crush, receives a game client through an app store. That client requires a connection to a foreign game server to deliver the experience, and to handle monetary transactions and record keeping. The older model of software preservation cannot deal with this asymmetry of access. If the foreign server turns off at some point in the future, that part of the game system is now, most likely, irrecoverable. If that server is gone, then in all likelihood, the game client will no longer function. This risk is inherent for a majority of current games. Even when the client is not as dependent on a server connection for play, there may be other parts of the system, like updates, automatic patching or mobile platform APIs, that do still require network access.
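The asymmetry described above can be sketched as a small dependency check. This is only an illustrative model, not an established preservation tool: the `Component` class, the `playable` function, and the example component names are all hypothetical, and "archived" stands in for "a preservation effort holds a working copy".

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """One part of a game system, e.g. a ROM, a client app, or a remote server."""
    name: str
    archived: bool  # assumption: True means a preserved, working copy exists
    depends_on: list[str] = field(default_factory=list)


def playable(target: str, components: dict[str, Component]) -> bool:
    """Return True only if the target and everything it depends on is archived."""
    seen: set[str] = set()
    stack = [target]
    while stack:
        name = stack.pop()
        if name in seen:
            continue
        seen.add(name)
        part = components.get(name)
        # A missing or unarchived part anywhere in the chain breaks playability.
        if part is None or not part.archived:
            return False
        stack.extend(part.depends_on)
    return True


# Standard model: a single self-contained piece of game data.
cartridge_game = {"rom": Component("rom", archived=True)}

# Network-contingent model: the client was saved, the server was not.
mobile_game = {
    "client": Component("client", archived=True, depends_on=["server"]),
    "server": Component("server", archived=False),
}

print(playable("rom", cartridge_game))  # True
print(playable("client", mobile_game))  # False
```

Even in this toy form, the point carries: saving the client alone is not enough once any part of the chain lives on a machine the archivist never had access to.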

One way out of this problem is to emulate everything involved in the entire network. Get all the server and client software functioning again through emulation or modification and POW! back comes the game play. While this might be feasible on a technical level, you have the issue that any significantly networked game or activity probably also had a contemporaneous community associated with it, and a social space within the game program itself that cannot be recovered. This is most striking in MMOs, where the game is essentially an operationalization of a social world. If you recover the whole system, you really don’t get back much of the experience. (For more on this, see The Preserving Virtual Worlds Report, and Henry Lowood’s discussion of “perfect capture” (paywalled here).)

The major problem with full network emulation is access to the information necessary to recreate a system or network of systems. Most game servers are proprietary, considered corporate secrets, and will never be distributed in a manner that allows for their emulation. That is not to say that with significant, dedicated reverse engineering effort you can’t create something that imitates a private server; you can. As the work on Nostalrius shows, you can even get all the way to potential litigation. But the effort required to emulate networked APIs adds another level of complexity to the preservation task. You can no longer just save a game’s data and expect it to function; you need to save its whole network. From a preservation perspective this is an untenable nightmare. The technical burden is now significantly increased along with storage and reproduction requirements.

Another issue with access is the online distribution systems that supply most games. Whether through Steam, Google Play, PSN or iOS, we’ve offloaded management of our game data to cloud services and walled gardens. This is a problem since most of these services reserve the right to strip access to content at any time, and to update that content without providing access to previous versions. Without dedicated preservation efforts, and an implicit dependence on tools created for piracy, once an application is gone from these services it will be hard (or impossible) to get back. Remember back in 2007, when everyone was using iPhones as light-sabers? Well, if you don’t, I can’t show you it, since the original application was removed for IP issues and replaced with a different one. Remember when the Undertaker in Hearthstone was like totally broken? Well, I really can’t show you that one (as a playable experience) because it was patched out ages ago. There is now an assumption that older versions of things should not be recoverable in a playable way, since the older version is now obviously deficient and should be forgotten. Like how once they colorized Casablanca, we threw out that crummy black and white version.

The most pressing and least mentioned issue is the scale of the problem as it exists today, and how it will continue into the future. I’ve presented two different preservation objects. The first, according to the standard preservation model, is a non-networked program made for a dedicated, non-networked platform. The second is a highly network-contingent object that we don’t really have a viable preservation model for. Obviously there are cases in between these two extremes (and have been for most of the history of games), but overly complex straw men risk becoming real and complicating the argument.

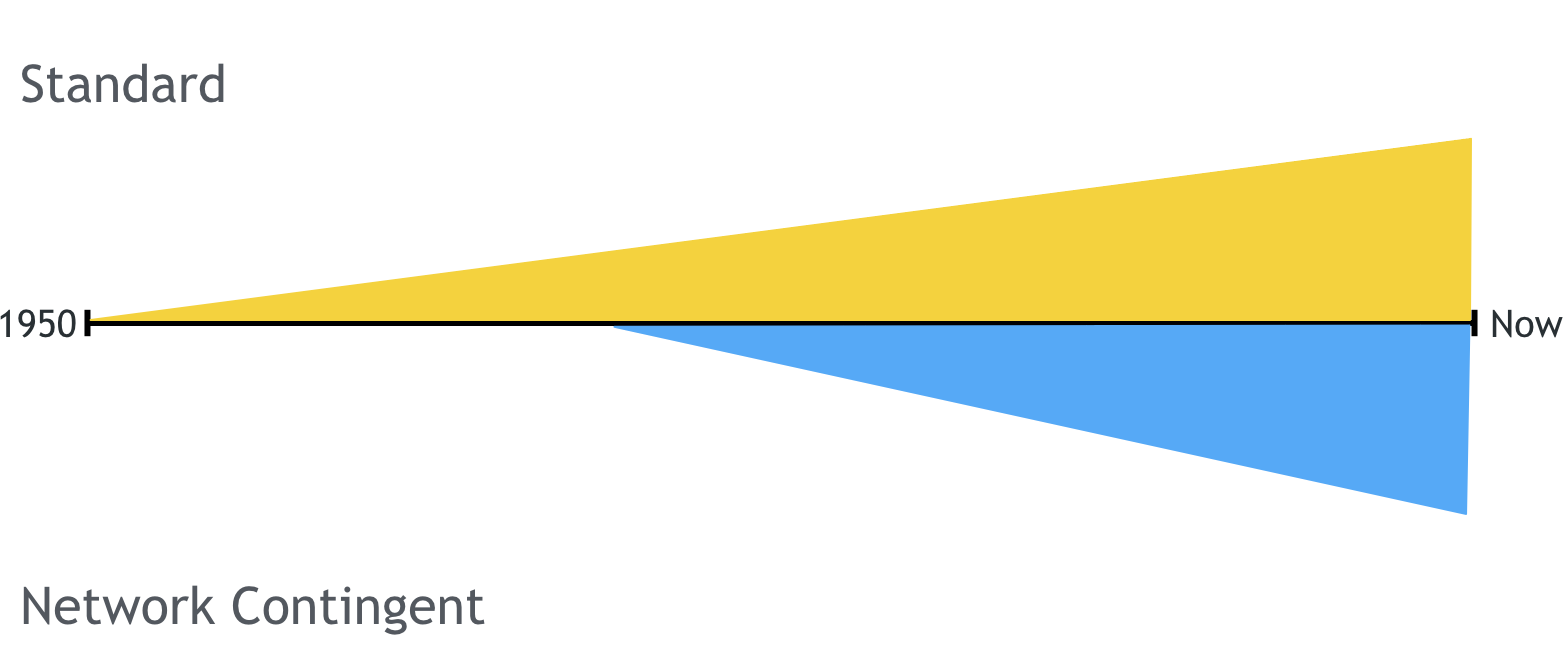

I made a series of graphs to illustrate the problem. (They are highly empirical, as you will see.) The first two are plots of the development of traditional, standard model games vs network contingent ones over the history of games. Until now, or the recent past, the perception has been that things have developed apace. There are a lot of networked games, but also a lot that do not appear to require network access to function (this is probably now untrue, even for singular experiences, but again strawmen!). Both developments are probably following some form of exponential curve, as more of the world gains access to the technical knowledge and tools needed to make games.

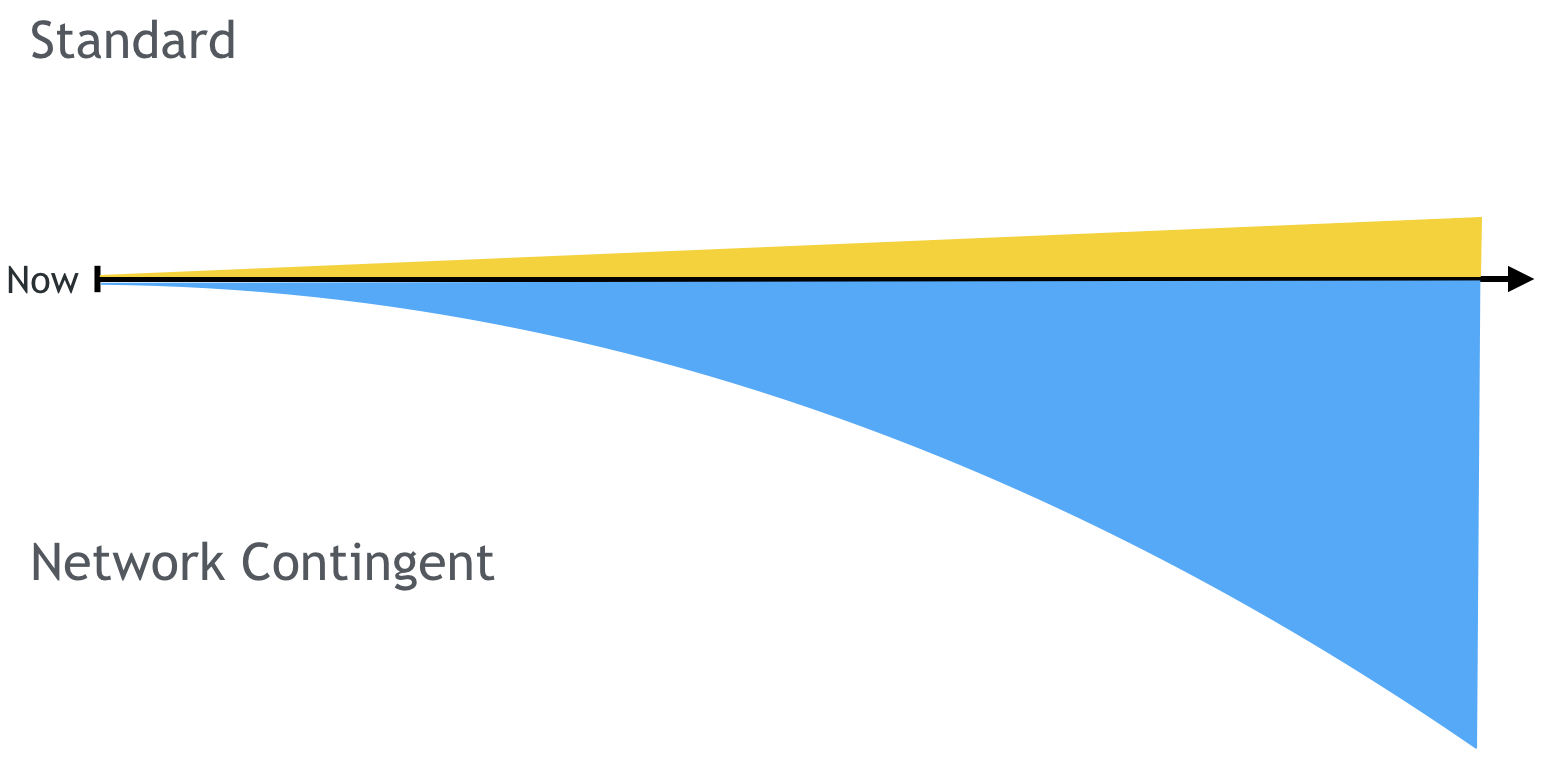

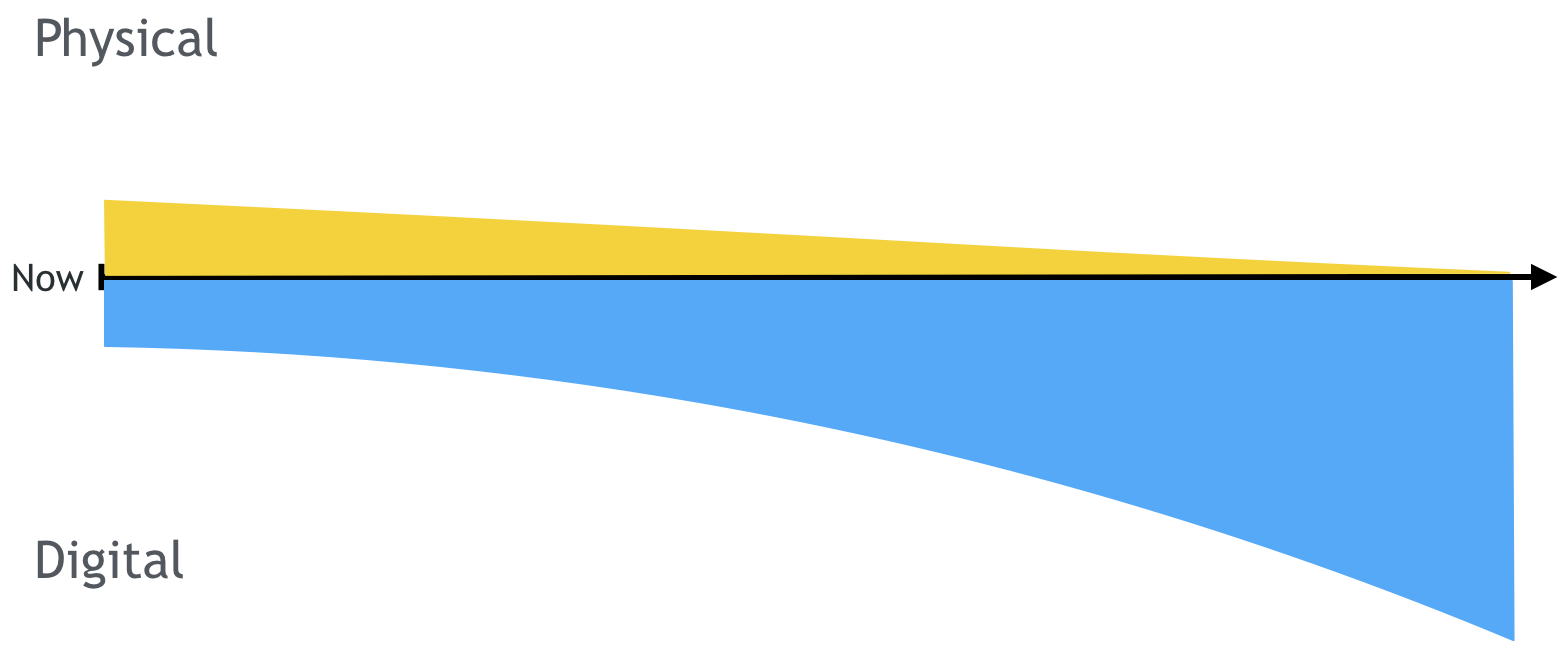

And then into the future:

The issue appears when projecting into the future, since the two curves are definitely not the same. In fact, the vast majority of the games that will be made by humanity going forward will be network contingent. This is a simple reflection of our networked society, and the expectations of networked connections that are now built into human experience from childhood. This issue also pans out when you look at the available (albeit slightly problematic) data.

MobyGames, a website that tries to account for all historical games, lists around 55,000 titles in its database. Of those titles, around 20,000 alone are for some form of the Windows operating system. Steam, the popular PC game distribution service, has a total of 9,175 games available for play on the current version of the Windows operating system. So roughly half of all the games created for Windows since its inception as an operating system are currently available for Windows 10, network contingent, and distributed through Steam.

The situation gets a bit more ridiculous when you consider mobile distribution platforms. A majority of the applications available through the iOS App Store are games. According to recent numbers from Pocket Gamer there are over 500,000 games available for download to your iPhone. That is, based on the MobyGames numbers, there is an order of magnitude more games on the App Store, right now, than have been created for the entire history of video games. The scale of production is immense, and none of those games fit into the previous considerations of game preservation; they are all network contingent. One response is that most of those games are probably crap, and while that might be true, who’s deciding what’s crap and what’s a “legitimate” game? If some young, future world renowned game designer made a bunch of unpopular apps before hitting the big time, we’d probably want those to be preserved, right?
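For anyone who wants to check the back-of-the-envelope comparisons in the last two paragraphs, here is the arithmetic as a short script. The figures are the rounded ones quoted in this post; the variable names are mine, and the calculation assumes the Steam and MobyGames counts are directly comparable, which (as noted) is a bit problematic.

```python
# Rounded figures quoted in the post.
mobygames_total = 55_000      # all titles listed on MobyGames
mobygames_windows = 20_000    # MobyGames titles for some form of Windows
steam_windows = 9_175         # Steam games playable on current Windows
app_store_games = 500_000     # games on the iOS App Store (Pocket Gamer)

# Share of historical Windows titles represented by the Steam catalog.
steam_share = steam_windows / mobygames_windows

# How many times larger the App Store catalog is than the MobyGames record.
app_store_ratio = app_store_games / mobygames_total

print(f"Steam share of Windows titles: {steam_share:.0%}")  # 46%
print(f"App Store vs. MobyGames: {app_store_ratio:.1f}x")   # 9.1x
```

So "roughly half" is about 46 percent, and "an order of magnitude" is a factor of about nine.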

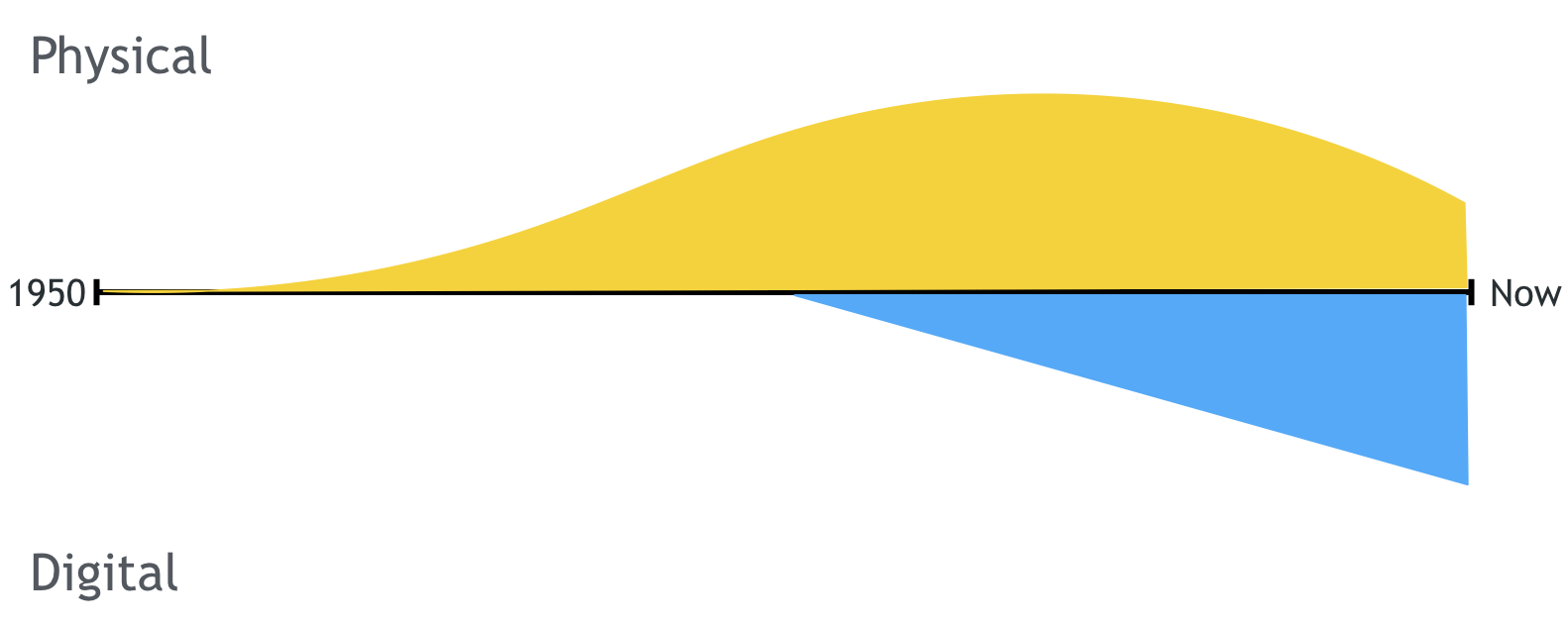

The second two graphs highlight the other important implication, which is that most future games will be disseminated without physical form. I still don’t know of preservation models for network distributed content, and it will soon be the only type of content that is created.

And again, into the future:

The incredible production rates of current games, and the inability to currently preserve them all will lead to a situation where predominantly single player, non-networked games are overrepresented (or in many cases the only representatives) in the playable record. I don’t see a way to actually avoid this situation except to be aware of it. If developers and designers disseminate more information about their development practices, or take on a standardized framework for the free dissemination of historical source code and assets, then those breadcrumbs could help prevent complete loss. You can’t reconstruct an entire loaf from the crumbs, but you can gain a sense of its texture, ingredients, and baking process.

Solutions?

Okay, so the situation is looking pretty dire. What are some ways to combat this looming preservation quagmire? (It’s also not looming, since it’s already here.)

First is to consider what we are trying to save when we preserve video games. What I thought we were trying to save is the ability to play a historical game at some point in the future. What I’m thinking we need to do is to record the act of play itself. This is the position of James Newman (and probably others). To “record the act of play” is to develop dedicated methodologies to save how people today interact with their games. These methods would involve video capture, textual description, and some means to describe that data for future contextualization and recovery. We probably can’t save all the games on the app store in a playable state, but we could probably record a video of some part of them, along with a player describing the experience. This is a way to hedge against total loss, since there will be at least some historical record of the object aside from its name on a list somewhere (if even that). The good news here is that YouTube and Twitch are effectively archiving gameplay every day. The bad news is that we need means to organize and back up those records, since they will only last as long as Google and Amazon consider them profitable or useful.
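To make "some means to describe that data" a little more concrete, here is a minimal sketch of what a record of play might look like. It is not based on any existing archival standard; the `PlayRecord` fields, the file name, and the example values are all hypothetical placeholders.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PlayRecord:
    """Hypothetical description of one captured play session."""
    game_title: str
    platform: str
    game_version: str        # matters because patched-out states are otherwise lost
    recorded_on: str         # ISO 8601 date of the play session
    video_file: str          # path or identifier of the captured footage
    player_commentary: str   # the player's own description of the experience
    tags: list[str] = field(default_factory=list)


def to_json(record: PlayRecord) -> str:
    """Serialize a record to plain JSON so it outlives any one video host."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


# All values below are made-up examples, not real archival data.
example = PlayRecord(
    game_title="Example Mobile Game",
    platform="iOS",
    game_version="1.0.3",
    recorded_on="2016-05-01",
    video_file="example-session-001.mp4",
    player_commentary="First ten minutes, including the tutorial and store prompts.",
    tags=["network-contingent", "free-to-play"],
)

print(to_json(example))
```

The design choice worth noting is the plain-text output: a description stored alongside the video, in a format that does not depend on a particular service, is the part most likely to survive if the hosting platform does not.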

Second, maybe get the people creating these things to dedicate a little more time to basic preservation activities. Releasing records of development, production processes, and legacy source code would allow other insights to be made about games, and in some cases allow for their recreation or recovery. I know this is a tall order, since the current corporate culture around all software development is to play things close to the chest. It would be nice, however, if the best advice archivists could offer game developers wasn’t to just steal things from their workplaces.

Third, there needs to be more societal pressure and motivation to legitimate games as cultural production worth saving, a la film, and to create larger institutional structures that fight for preservation activity. I spend some time at film preservation conferences, and it is fairly common that high-level executives from major Hollywood studios attend, speak and participate. This is partly due to the commercial viability of film preservation, since old films can still stock streaming services. But it’s also due to a culture in the film industry itself that understands and appreciates its history, the importance of maintaining that history, and the societal benefits that come from preserving cultural heritage. It would be great for the games industry to realize that as well.

Lastly, everything I’ve discussed regarding game software extends to software in general. And in that area, I think things may be even worse. Games at least form a semi-coherent class of software that can be framed as a cultural production worth saving. I don’t see the same consideration for office software, embedded systems or mobile applications, and that’s a shame.